Participate in our studies! Visit Teng Lab Experiment Participation for details.

Welcome to the Cognition, Action, and Neural Dynamics Laboratory

We aim to better understand how people perceive, interact with, and move through the world, especially when vision is unavailable. To…

Principal Investigator:

Santani Teng

What is echolocation?

Sometimes, the surrounding world is too dark and silent for typical vision and hearing. This is true in deep caves, for example, or in murky water where little light penetrates. Animals living in these environments often have the ability to echolocate: they make sounds and listen for their reflections. Like turning on a flashlight in a dark room, echolocation is a way to illuminate objects and spaces actively using sound.

Sonar for people

Using tongue clicks, cane taps, or footfalls, some blind humans have demonstrated an ability to use echolocation (also called sonar, or biosonar in living organisms) to sense, interact with, and move around the world. What information does echolocation afford its practitioners? What factors contribute to learning it? How broadly is it accessible to blind, and sighted, people? Continuing past work from MIT and UC Berkeley, at Smith-Kettlewell I am investigating these and other questions: what spatial resolution is available to people using echoes to perceive their environments, how well echoes promote orientation and mobility in blind persons, and how this ability is mediated in the brain.

Previous work has shown that sighted blindfolded people can readily learn some echolocation tasks with a small amount of training, but that blind practitioners possess a clear expertise advantage, sometimes distinguishing the positions of objects to an angular precision of just a few degrees, about the width of a thumbnail held at arm's length. Identifying the basic perceptual factors that contribute to this performance, and what might let a novice echolocate like an expert, would clarify our understanding of human perception and could be a valuable resource for Orientation & Mobility instructors promoting greater independent mobility in blind travelers.

Assisted echolocation

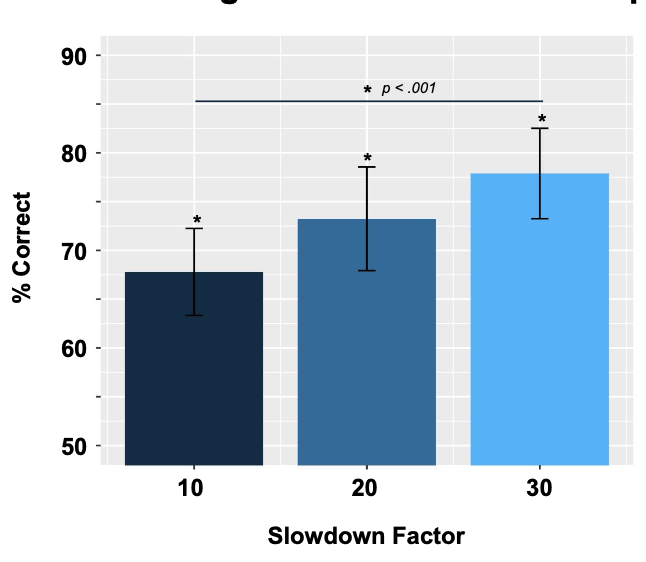

Inspired by both human and non-human echolocators, and driven by advances in computing technology, we are investigating artificial echoes as a perceptual and travel aid. For example, ultrasonic echoes, useful to bats but inaudible to humans, carry higher-resolution information than audible echoes. An assistive device could make the perceptual advantages of ultrasonic echolocation available to human listeners. Previous work showed that untrained listeners can quickly make spatial judgments using such echoes, and we are working on making artificial echolocation more perceptually useful as well as more ergonomic and convenient.

Neural mechanisms

Echolocation relies on making and perceiving sounds, but in blind expert practitioners it also activates brain regions typically used for vision. Using magnetoencephalography (MEG), electroencephalography (EEG), and magnetic resonance imaging (MRI), this recent line of inquiry seeks to understand how the brain processes echo information, separates it from other incoming sounds, and uses it to guide behavior.

Centers

Rehabilitation Engineering Research Center

The Center’s research goal is to develop and apply new scientific knowledge and practical, cost-effective devices to better understand and address the real-world problems of blind, visually impaired, and deaf-blind consumers. The RERC has many ongoing R&D projects and collaborative…

News

Object echolocation project receives Best Poster award at OPAM 29

A Smith-Kettlewell study measuring object information available via echolocation was recognized with a Best Poster award at the 2021 Conference on Object Perception, visual Attention, and visual Memory (OPAM 29).

Smith-Kettlewell Eye Research Institute to Illuminate Adaptive Strategies in Vision Impairment at IMRF Symposium

The Smith-Kettlewell Eye Research Institute played a key role at this year's International Multisensory Research Forum (IMRF) in Reno, Nevada, beginning June 17, 2024. The program included an all-SKERI symposium, "Shifting Sensory Reliance: Adaptive Strategies in Vision Impairment and Blindness."

SKERI Scientists Present Groundbreaking Research at the 24th Annual Vision Sciences Society Meeting

The Smith-Kettlewell Eye Research Institute (SKERI) is proud to announce its extensive participation in the 24th Annual Meeting of the Vision Sciences Society (VSS), held from May 17 to May 22, 2024. This leading conference, taking place in St. Pete

SKERI Postdoc Haydée García-Lázaro receives the Elsevier Vision Research Travel Award

Congratulations to Dr. Haydée García-Lázaro for receiving the Elsevier Vision Research Travel Award to attend the Vision Sciences Society (VSS) Conference.

SKERI Researchers Bring their Rehabilitation and Accessibility Vision to CSUN Assistive Technology Conference

Smith-Kettlewell's Rehabilitation Engineering Research Center (RERC) on Blindness and Low Vision, funded by the National Institute on Disability, Independent Living and Rehabilitation Research (NIDILRR), supports eight projects related to visual impairmen